Projects

A project consists of work that follows a single set of instructions and is tackled by experts with the same specialization. If the instructions change significantly, it’s usually best to treat that as a new project. Each expert must pass that project’s benchmark tasks before working on production tasks. It’s common for clients to run multiple projects at once, especially if they need different specialties, because every project requires its own dedicated pods.

Tasks

A task is a set of prompt-response pairs ready for annotation. Each task contains a template and immutable data that specifies how it will be displayed to experts, but the actual annotations are stored independently of the task data.

Task Lifecycle

As an important rule, every stage of a task should be easy to audit, and data on the platform should be immutable. To achieve this, the platform distinguishes between units and tasks: what you would normally think of as a task is actually a “unit”, and each annotatable stage in its lifecycle (or pipeline) is a task. Whenever an expert creates a new stage in the pipeline (for example, a review or an additional annotation), the platform records it without altering the previous stage, ensuring a complete audit trail. This also allows a unit to return to an earlier stage, for example when a review layer rejects the work and requests a do-over or asks experts to address feedback.

Task Claims
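
The unit and stage model can be illustrated with a small Python sketch. It is not the platform's actual implementation: the class names (`Unit`, `StageRecord`), the stage labels, and the method names are all hypothetical, chosen only to show an append-only stage history, the rule that a task has a single claimant at a time, and how a unit sent back to an earlier stage can recall who worked on that stage.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)  # frozen: a recorded stage can never be modified
class StageRecord:
    stage: str    # hypothetical stage label, e.g. "annotation" or "review"
    expert: str   # who completed this stage
    payload: str  # the work produced at this stage


@dataclass
class Unit:
    unit_id: str
    current_stage: str = "annotation"
    claimed_by: Optional[str] = None
    history: List[StageRecord] = field(default_factory=list)  # append-only

    def claim(self, expert: str) -> None:
        """One expert takes responsibility for the current stage."""
        if self.claimed_by is not None:
            raise RuntimeError(f"already claimed by {self.claimed_by}")
        self.claimed_by = expert

    def submit(self, payload: str, next_stage: str) -> None:
        """Record the finished stage as a new entry and move the unit on."""
        if self.claimed_by is None:
            raise RuntimeError("stage must be claimed before submitting")
        self.history.append(
            StageRecord(self.current_stage, self.claimed_by, payload)
        )
        self.claimed_by = None
        self.current_stage = next_stage

    def preferred_expert(self) -> Optional[str]:
        """Most recent expert who worked on the current stage, if any."""
        for record in reversed(self.history):
            if record.stage == self.current_stage:
                return record.expert
        return None


unit = Unit("unit-1")
unit.claim("alice")
unit.submit("first draft", next_stage="review")
unit.claim("bob")
# The reviewer rejects the work, so the unit returns to the earlier stage.
unit.submit("rejected: fix labels", next_stage="annotation")
print(unit.preferred_expert())  # alice
print(len(unit.history))        # 2
```

In this sketch, sending a unit back never rewrites earlier records; the rejection is simply one more entry in `history`, so the full trail remains available for audit.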

When an expert chooses (or is assigned) a task to work on, we call that a task claim. Each claim represents one expert taking responsibility for completing or reviewing a specific step in the pipeline. Claims help us track accountability and progress at every point in the lifecycle. Our platform prevents multiple users from claiming the same task. If a unit returns to an earlier stage, for example because a reviewer requests changes, the unit records which expert worked on that stage, and the scheduler can prioritize assigning the task back to them.

Pod Management

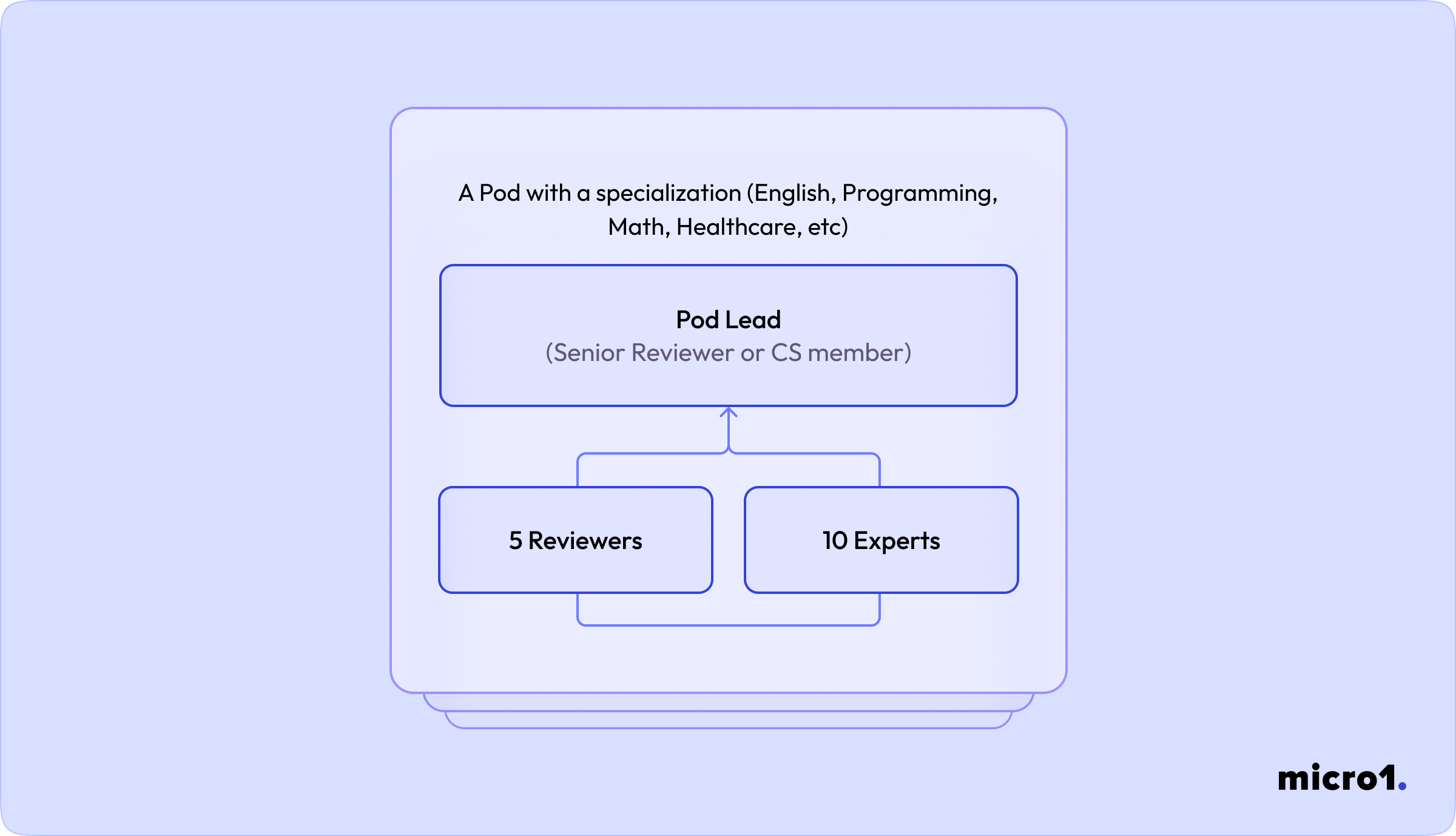

At micro1, we use a team pod structure to manage experts effectively. A typical pod includes:

- 10 experts

- 5 reviewers

- 1 pod lead (often a senior reviewer or a member of the Client Success team)

Bonuses

If a project is behind schedule, offering bonuses is often seen as a good way to motivate experts to prioritize the work, sometimes even by working on weekends. However, at micro1, we believe this approach should be considered carefully, as it may have unintended consequences. There are two common bonus structures:

- Linear bonuses: A flat amount added per task completed.

- Exponential bonuses: Increasing bonus tiers based on the total number of tasks completed in a set period (e.g., $5 per task for the first 10 tasks, then $15 per task up to 20 tasks, etc.).
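
As a worked illustration of how the two structures diverge, the following Python sketch computes both. The tier boundaries come from the example above; the $5 linear rate, the function names, the reading of tiers as marginal (each rate applies only to the tasks that fall inside its tier), and the choice to pay nothing beyond the last listed tier are assumptions made for illustration.

```python
# (upper task count of the tier, bonus per task inside that tier)
# Values taken from the example in the text; anything past the
# last tier is left unpaid here because the text only says "etc.".
TIERS = [(10, 5.0), (20, 15.0)]


def linear_bonus(tasks_completed: int, rate: float = 5.0) -> float:
    """Flat bonus per task (the $5 default rate is an assumed value)."""
    return rate * tasks_completed


def tiered_bonus(tasks_completed: int, tiers=TIERS) -> float:
    """Sum the bonus tier by tier, paying each rate only within its tier."""
    total = 0.0
    lower = 0
    for upper, rate in tiers:
        if tasks_completed <= lower:
            break
        tasks_in_tier = min(tasks_completed, upper) - lower
        total += tasks_in_tier * rate
        lower = upper
    return total


for n in (5, 10, 15, 20):
    print(n, linear_bonus(n), tiered_bonus(n))
```

Under these assumptions, an expert who completes 15 tasks in the period would earn $75 under the linear structure and $125 under the tiered one (10 tasks at $5 plus 5 tasks at $15).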