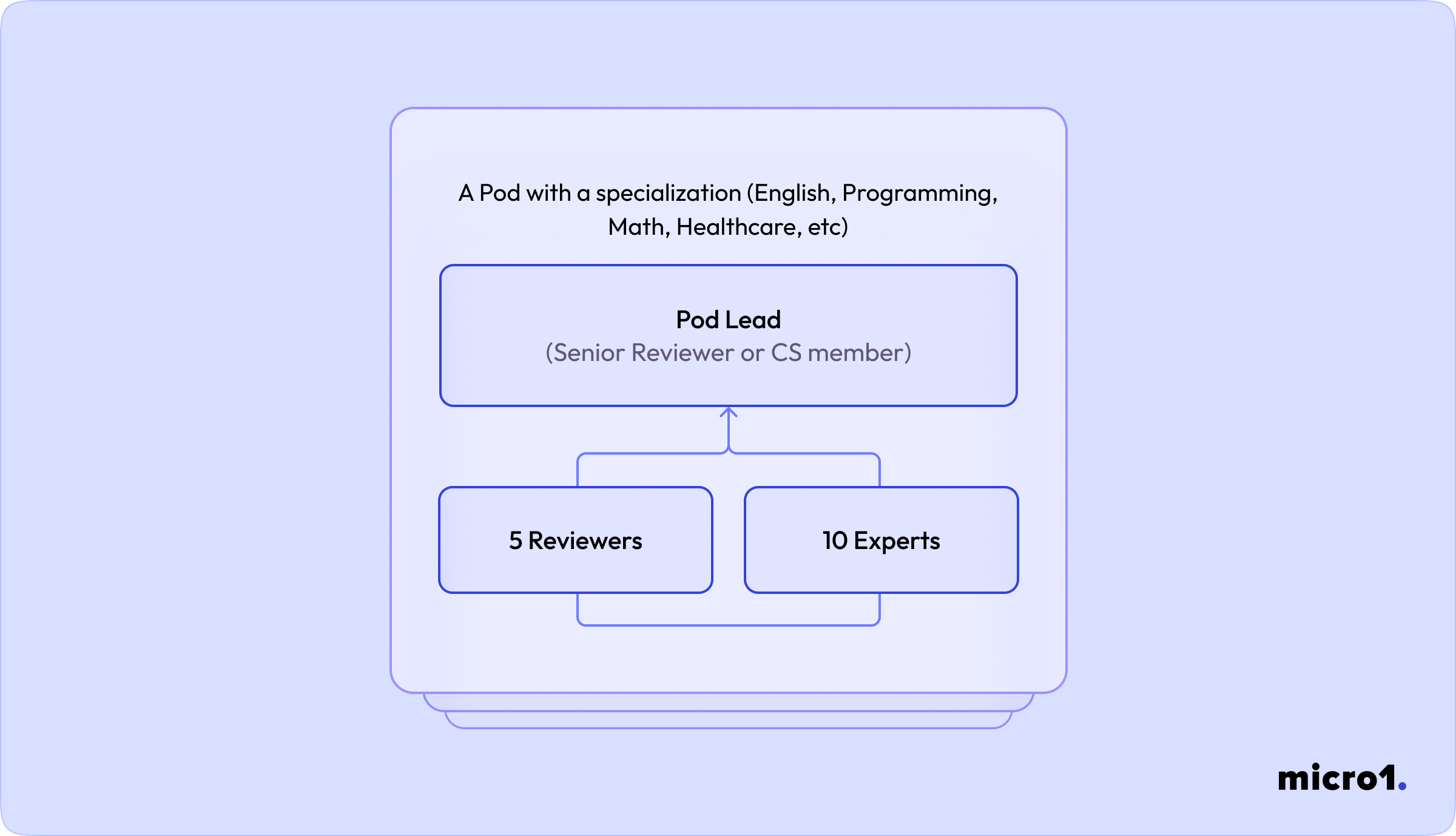

- People know who their manager is.

- Pod Leads feel responsible for upskilling their experts.

- Experts feel more comfortable asking for clarification within their pods.

- Since everyone in the pod has the same specialization, pods can stay together between projects.

- Experts are more receptive to feedback from their pod’s reviewers.