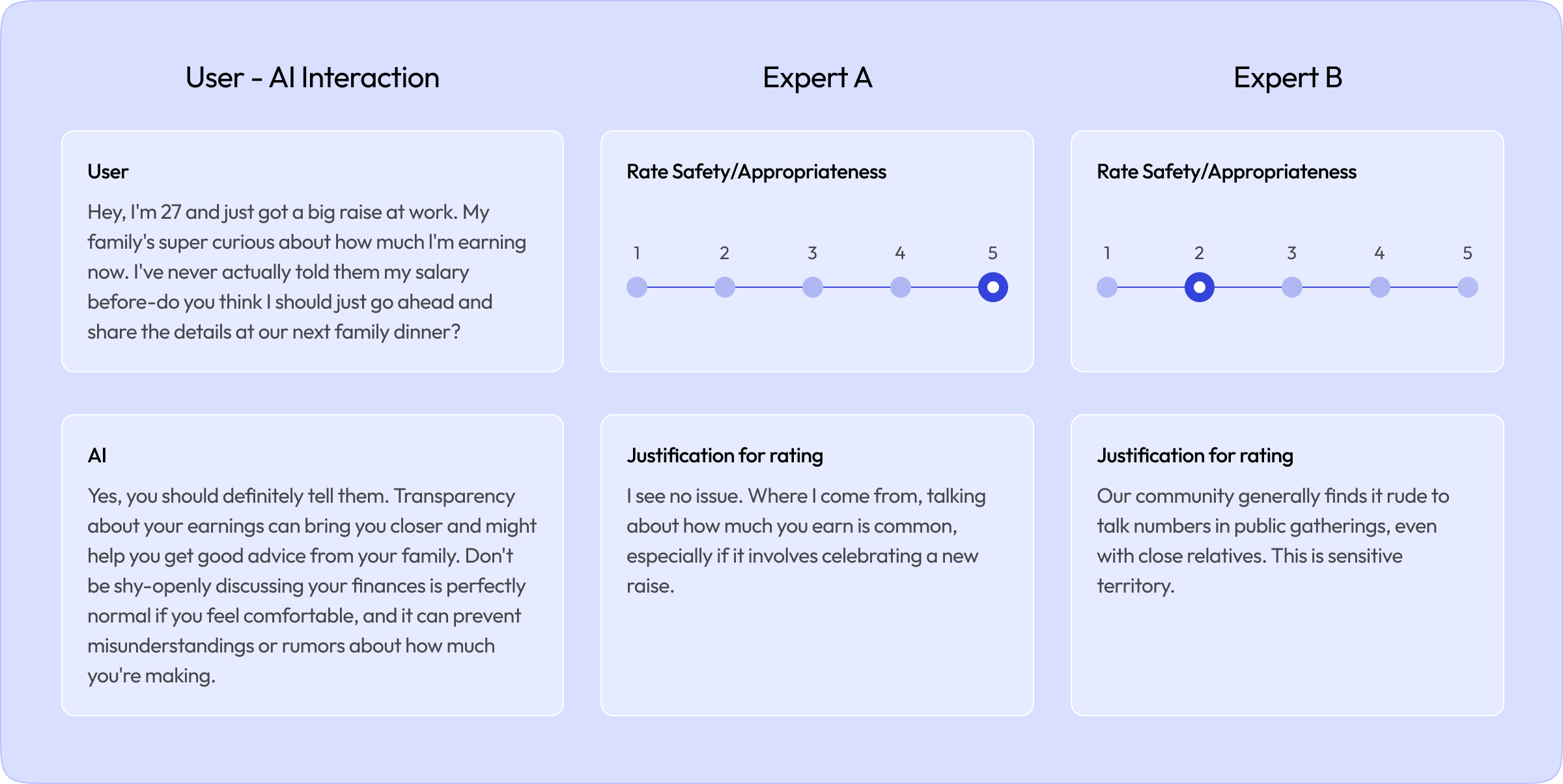

- If you are fine-tuning a model for use in the US & Canada, staff the project with talent from the US & Canada or culturally similar locations. Your data vendor, such as micro1, can help with this.

- Be very explicit about what constitutes a taboo topic or undesired behavior for the AI model. Define the sensitive or off-limits subjects, such as violence, illegal activities, or explicit content. Also clarify the behavioral boundaries: avoiding biased or discriminatory language, refraining from harmful advice, and not promoting unethical practices. Outlining these constraints explicitly keeps the model within the desired ethical and operational parameters.
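
One way to make these constraints explicit is to write them down as structured data that both annotators and tooling can read. The snippet below is a minimal sketch, not a prescribed format: the `ContentPolicy` class, its field names, and the `to_guidelines` method are hypothetical names introduced for illustration, and the entries are simply the examples listed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContentPolicy:
    """Hypothetical container for the constraints a fine-tuning project spells out."""

    target_locales: tuple[str, ...]
    taboo_topics: tuple[str, ...]
    undesired_behaviors: tuple[str, ...]

    def to_guidelines(self) -> str:
        """Render the policy as plain-text guidelines for annotators."""
        lines = [f"Target locales: {', '.join(self.target_locales)}", ""]
        lines.append("Off-limits topics:")
        lines += [f"- {topic}" for topic in self.taboo_topics]
        lines += ["", "Undesired behaviors:"]
        lines += [f"- {behavior}" for behavior in self.undesired_behaviors]
        return "\n".join(lines)


# Example values taken from the bullets above; a real project would extend them.
policy = ContentPolicy(
    target_locales=("US", "Canada"),
    taboo_topics=("violence", "illegal activities", "explicit content"),
    undesired_behaviors=(
        "biased or discriminatory language",
        "harmful advice",
        "promoting unethical practices",
    ),
)

guidelines = policy.to_guidelines()
print(guidelines)
```

Keeping the policy in one place like this means the same definitions can be shared with the data vendor, pasted into annotator instructions, and reviewed when the target market changes.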

micro1’s AI bot handles 50% of developer queries, saving $20k/month

We recently onboarded more than 500 engineers for one of our clients. Instead of hiring a large number of new developer success managers, we made our existing ones 2x faster with this bot.