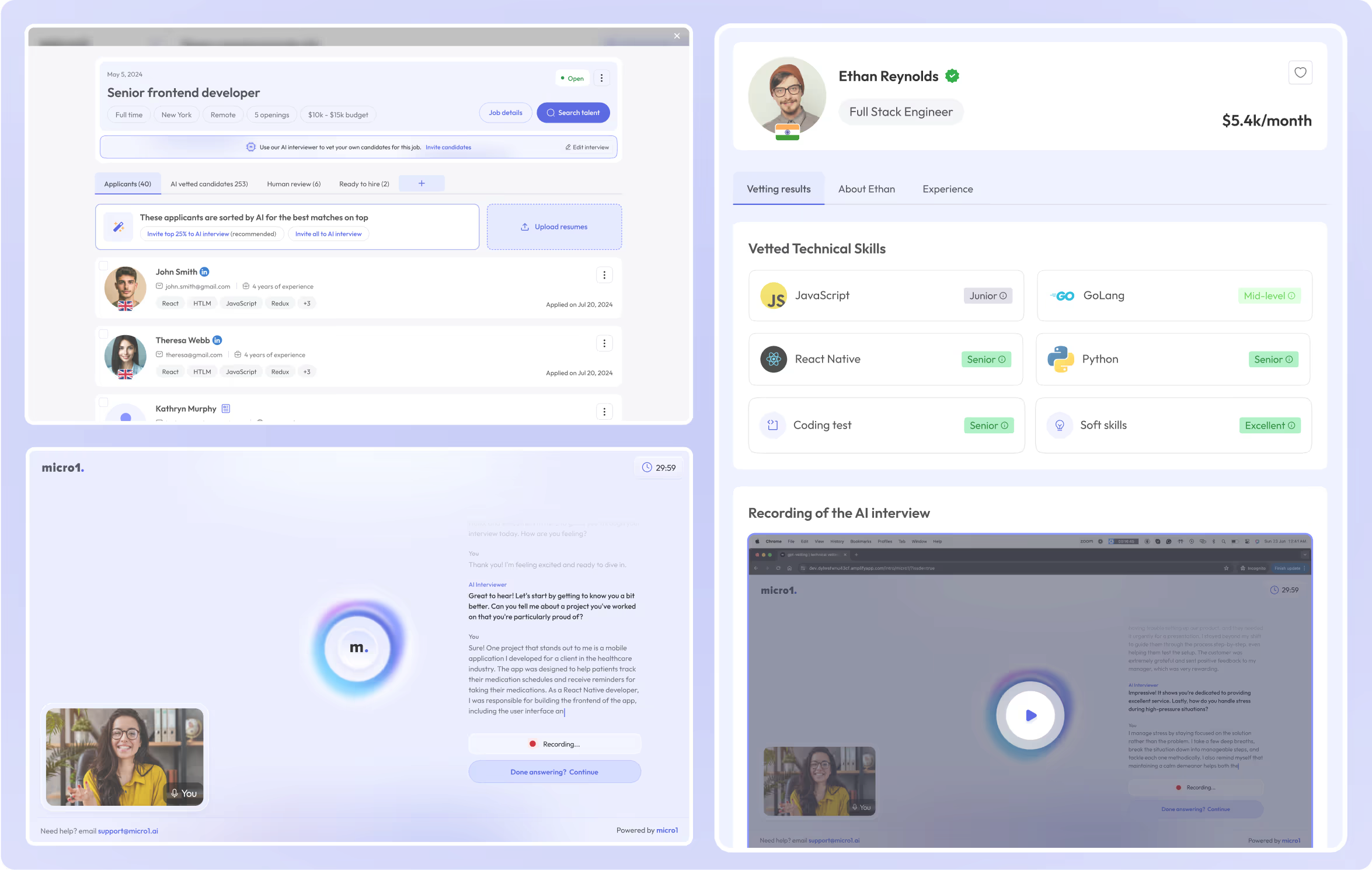

micro1 immediately matches open job requirements with the 50,000+ experts in our talent pool and, when needed, posts openings on platforms like LinkedIn, X jobs and their international equivalents. Applicants are screened by our AI Interviewer, and a report is generated for our recruiters to review, all on autopilot.

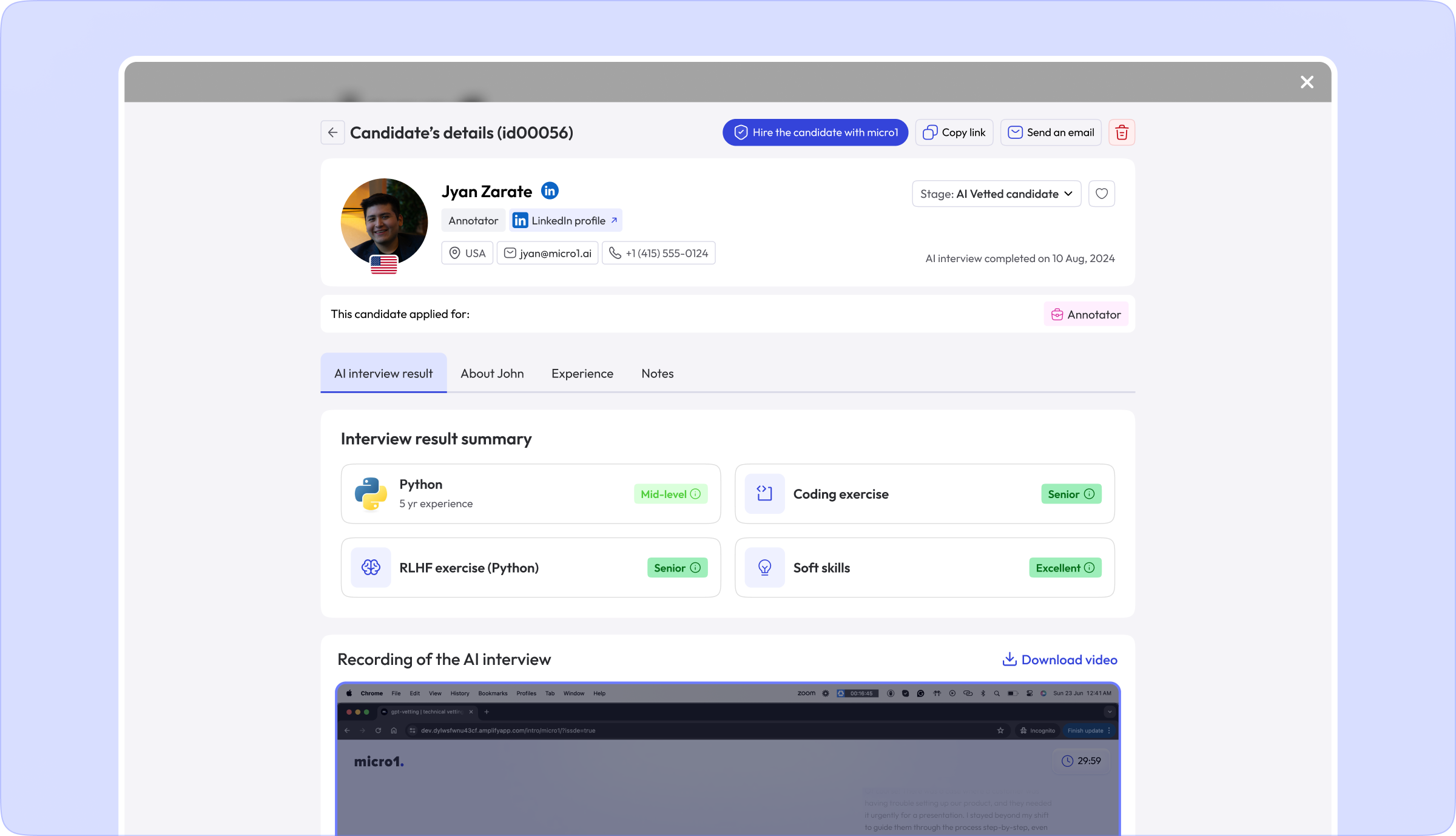

An example of an AI Interview report. From here, our recruiters can open the human data exercise and review the candidate’s answers in depth.

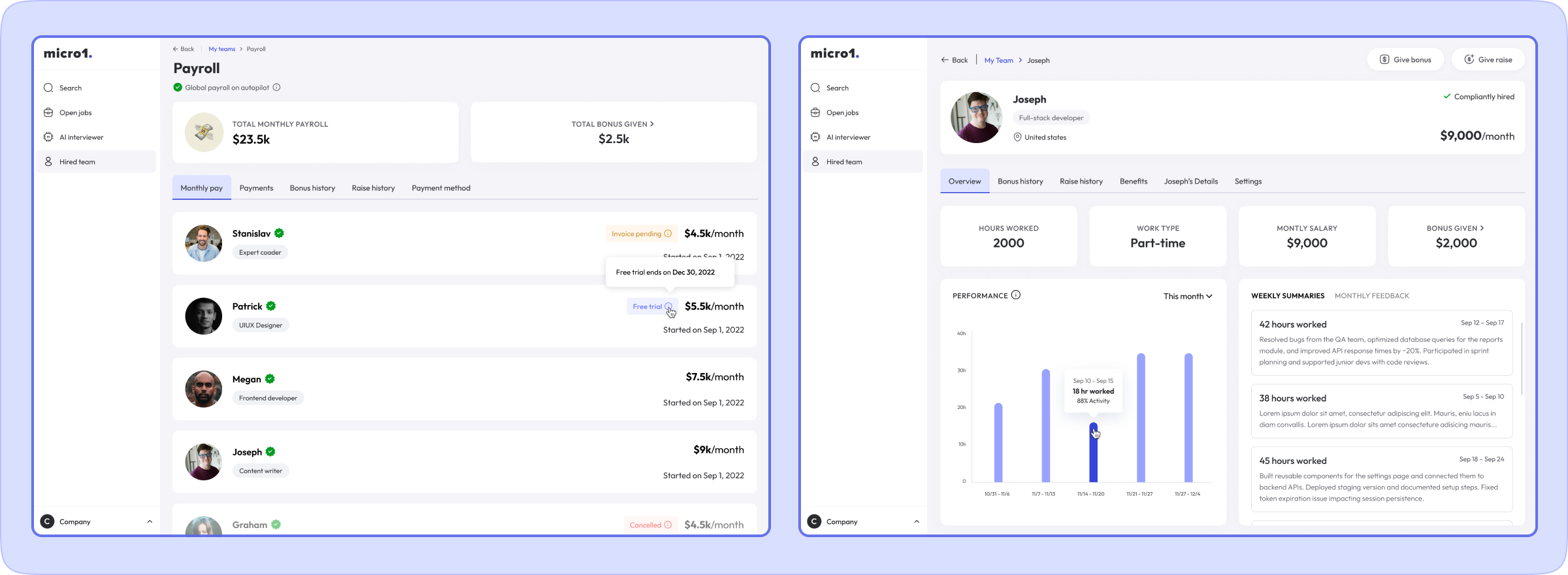

An example of the payroll, talent, and performance management dashboards that are created once an expert has been hired and onboarded onto a project.